
Suggested Citation: "4 Classroom Assessment." National Research Council. 2014. Developing Assessments for the Next Generation Science Standards. Washington, DC: The National Academies Press. doi: 10.17226/18409.


4

CLASSROOM ASSESSMENT

Assessments can be classified in terms of the way they relate to instructional activities. The term classroom assessment (sometimes called internal assessment) is used to refer to assessments designed or selected by teachers and given as an integral part of classroom instruction. They are given during or closely following an instructional activity or unit. This category of assessments may include teacher-student interactions in the classroom, observations, student products that result directly from ongoing instructional activities (called "immediate assessments"), and quizzes closely tied to instructional activities (called "close assessments"). They may also include formal classroom exams that cover the material from one or more instructional units (called "proximal assessments").1 This category may also include assessments created by curriculum developers and embedded in instructional materials for teacher use.

___________

1This terminology is drawn from Ruiz-Primo et al. (2002) and Pellegrino (2013).

In contrast, external assessments are designed or selected by districts, states, countries, or international bodies and are typically used to audit or monitor learning. External assessments are usually more distant in time and context from instruction. They may be based on the content and skills defined in state or national standards, but they do not necessarily reflect the specific content that was covered in any particular classroom. They are typically given at a time that is determined by administrators, rather than by the classroom teacher. This category includes such assessments as the statewide science tests required by the No Child Left Behind Act or used for other accountability purposes (called "distal assessments"), as well as national and international assessments such as the National Assessment of Educational Progress and the Programme for International Student Assessment (called "remote assessments"). Such external assessments and their monitoring function are the subject of the next chapter.
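The five labels used above (immediate, close, proximal, distal, remote) form an ordered continuum by distance from instruction. As a minimal sketch, they could be encoded in Python as shown below. The enum name, the integer ranks, and the helper function are illustrative assumptions and are not part of the cited terminology.

```python
from enum import IntEnum


class AssessmentDistance(IntEnum):
    """Illustrative ordering of assessment types by distance from instruction.

    The labels follow the terminology used in this chapter; the integer
    ranks are a hypothetical encoding chosen only to make the order explicit.
    """
    IMMEDIATE = 1  # student products of ongoing instructional activities
    CLOSE = 2      # quizzes closely tied to instructional activities
    PROXIMAL = 3   # classroom exams covering one or more units
    DISTAL = 4     # e.g., statewide accountability tests
    REMOTE = 5     # e.g., national and international assessments


def is_classroom_assessment(kind: AssessmentDistance) -> bool:
    """Classroom (internal) assessments are the three closest categories."""
    return kind <= AssessmentDistance.PROXIMAL


for kind in AssessmentDistance:
    scope = "classroom" if is_classroom_assessment(kind) else "external"
    print(f"{kind.name.lower():<9} -> {scope}")
```

Using an ordered type rather than plain strings makes the "more distant from instruction" comparison explicit.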

In this chapter, we illustrate the types of assessment tasks that can be used in the classroom to meet the goals of A Framework for K-12 Science Education: Practices, Crosscutting Concepts, and Core Ideas (National Research Council, 2012a, hereafter referred to as "the framework") and the Next Generation Science Standards: For States, By States (NGSS Lead States, 2013). We present example tasks that we judged to be rigorous, deep probes of student capabilities that are also consistent with the framework and the Next Generation Science Standards (NGSS). We discuss external assessments in Chapter 5 and the integration of classroom and external assessments into a coherent system in Chapter 6. The latter chapter argues that an effective assessment system should include a variety of internal and external assessments, each designed to fulfill a complementary function in assessing achievement of the NGSS performance objectives.

Our starting point for this in-depth look at classroom assessment is the analysis in Chapter 2 of what the new science framework and the NGSS imply for assessment. We combine these ideas with our analysis in Chapter 3 of current approaches to assessment design to consider key aspects of classroom assessment that can serve as one component in assessing the NGSS performance objectives.

ASSESSMENT PURPOSES: FORMATIVE OR SUMMATIVE

Classroom assessments can be designed primarily to guide instruction (formative purposes) or to support decisions made beyond the classroom (summative purposes). Assessments used for formative purposes occur during the course of a unit of instruction and may involve both formal tests and informal activities conducted as part of a lesson. They may be used to identify students' strengths and weaknesses, assist educators in planning subsequent instruction, assist students in guiding their own learning by evaluating and revising their own work, and foster students' sense of autonomy and responsibility for their own learning (Andrade and Cizek, 2010, p. 4). Assessments used for summative purposes may be administered at the end of a unit of instruction. They are designed to provide evidence of achievement that can be used in decision making, such as assigning grades; making promotion or retention decisions; and classifying test takers according to defined performance categories, such as "basic," "proficient," and "advanced" (levels often used in score reporting) (Andrade and Cizek, 2010, p. 3).
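Classifying test takers into the performance categories named above ("basic," "proficient," "advanced") usually amounts to comparing a score against cut scores. The minimal Python sketch below assumes a hypothetical 0-100 scale and hypothetical cut scores of 60 and 80. In practice, cut scores are typically set through a formal standard-setting process.

```python
from bisect import bisect_right

# Hypothetical cut scores on a hypothetical 0-100 scale: the minimum
# score for "proficient" and the minimum score for "advanced".
CUT_SCORES = [60, 80]
CATEGORIES = ["basic", "proficient", "advanced"]


def classify(score: float) -> str:
    """Return the performance category whose score band contains `score`."""
    if not 0 <= score <= 100:
        raise ValueError("score must be on the 0-100 scale")
    # bisect_right counts how many cut scores are <= score, which is
    # exactly the index of the matching category.
    return CATEGORIES[bisect_right(CUT_SCORES, score)]


print([classify(s) for s in (45, 60, 79.5, 92)])
```

A score equal to a cut score falls into the higher category, a common but not universal convention.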

The key difference between assessments used for formative purposes and those used for summative purposes is in how the information they provide is to be used: to guide and advance learning (usually while instruction is under way) or to obtain evidence of what students have learned for use beyond the classroom (usually at the conclusion of some defined period of instruction). Whether intended for formative or summative purposes, evidence gathered in the classroom should be closely linked to the curriculum being taught. This does not mean that the assessment must use the formats or exactly the same material that was presented in instruction, but rather that the assessment task should directly address the concepts and practices to which the students have been exposed.

The results of classroom assessments are evaluated by the teacher or sometimes by groups of teachers in the school. Formative assessments may also be used for reflection among small groups of students or by the whole class together. Classroom assessments can play an integral role in students' learning experiences while also providing evidence of progress in that learning. Classroom instruction is the focus of the framework and the NGSS, and it is classroom assessment—which by definition is integral to instruction—that will be the most straightforward to align with NGSS goals (once classroom instruction is itself aligned with the NGSS).

Currently, many schools and districts administer benchmark or interim assessments, which seem to straddle the line between formative and summative purposes (see Box 4-1). They are formative in the sense that they are used for a diagnostic function intended to guide instruction (i.e., to predict how well students are likely to do on the end-of-year tests). However, because of this purpose, the format they use resembles the end-of-year tests rather than other types of internal assessments commonly used to guide instruction (such as quizzes, classroom dialogues, observations, or other types of immediate assessment strategies that are closely connected to instruction). Although benchmark and interim assessments serve a purpose, we note that they are not the types of formative assessments that we discuss in relation to the examples presented in this chapter or that are advocated by others (see, e.g., Black and Wiliam, 2009; Heritage, 2010; Perie et al., 2007). Box 4-1 provides additional information about these types of assessments.


BOX 4-1

BENCHMARK AND INTERIM ASSESSMENTS

Currently, many schools and districts administer benchmark or interim assessments, which they treat as formative assessments. These assessments use tasks that are taken from large-scale tests given in a district or state or are very similar to tasks that have been used in those tests. They are designed to provide an estimate of students' level of learning, and schools use them to serve a diagnostic function, such as to predict how well students will do on the end-of-year tests.

Like the large-scale tests they closely resemble, benchmark tests rely heavily on multiple-choice items, each of which tests a single learning objective. The items are developed to provide only general information about whether students understand a particular idea, though sometimes the incorrect choices in a multiple-choice item are designed to probe for particular common misconceptions. Many such tasks would be needed to provide solid evidence that students have met the performance expectations for their grade level or grade band.

Teachers use these tests to assess student knowledge of a particular concept or a particular aspect of practice (e.g., control of variables), typically after teaching a unit that focuses on specific discrete learning objectives. The premise behind using items that mimic typical large-scale tests is that they help teachers measure students' progress toward objectives for which they and their students will be held accountable and provide a basis for deciding which students need extra help and what the teacher needs to teach again.

CHARACTERISTICS OF NGSS-ALIGNED ASSESSMENTS

Chapter 2 discusses the implications of the NGSS for assessment, which led to our first two conclusions:

- Measuring the three-dimensional science learning called for in the framework and the Next Generation Science Standards requires assessment tasks that examine students' performance of scientific and engineering practices in the context of crosscutting concepts and disciplinary core ideas. To adequately cover the three dimensions, assessment tasks will generally need to contain multiple components (e.g., a set of interrelated questions). It may be useful to focus on individual practices, core ideas, or crosscutting concepts in the various components of an assessment task, but, together, the components need to support inferences about students' three-dimensional science learning as described in a given performance expectation (Conclusion 2-1).


- The Next Generation Science Standards require that assessment tasks be designed so that they can accurately locate students along a sequence of progressively more complex understandings of a core idea and successively more sophisticated applications of practices and crosscutting concepts (Conclusion 2-2).
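Conclusion 2-1 implies a simple design-time check: any single component may target only some of the three dimensions, but the components taken together must address all three. The minimal Python sketch below illustrates that check. The `Component` type, the dimension labels, and the example prompts are hypothetical.

```python
from dataclasses import dataclass, field

# The three dimensions of science learning named in the framework and the NGSS.
DIMENSIONS = {"practice", "core idea", "crosscutting concept"}


@dataclass
class Component:
    """One part of a multicomponent task, tagged with the dimensions it targets."""
    prompt: str
    dimensions: set = field(default_factory=set)


def missing_dimensions(components):
    """Return the dimensions that no component of the task addresses."""
    covered = set()
    for component in components:
        covered |= component.dimensions
    return DIMENSIONS - covered


# Hypothetical two-component task; the prompts are illustrative only.
task = [
    Component("State a claim about a structure-function relationship", {"core idea"}),
    Component("Develop a model that supports the claim", {"practice", "core idea"}),
]
print(missing_dimensions(task))  # {'crosscutting concept'}
```

An empty result is necessary but not sufficient. The components must still, together, support an inference about the targeted performance expectation.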

As discussed in Chapter 2, students will likely need repeated exposure to investigations and tasks aligned to the framework and the NGSS performance expectations, guidance about what is expected of them, and opportunities to reflect on their performance in order to develop these proficiencies. The kind of instruction that will be effective in teaching science as the framework and the NGSS envision will require students to engage in science and engineering practices in the context of disciplinary core ideas, and to make connections across topics through the crosscutting ideas. Such instruction will include activities that provide many opportunities for teachers to observe and record evidence of student thinking, such as when students develop and refine models; generate, discuss, and analyze data; engage in both spoken and written explanations and argumentation; and reflect on their own understanding of the core idea and the subtopic at hand (possibly in a personal science journal).

The products of such instruction form a natural link to the characteristics of classroom assessment that aligns with the NGSS. We highlight four such characteristics:

- the use of a variety of assessment activities that mirror the variety in NGSS-aligned instruction;

- tasks that have multiple components so they can yield evidence of three-dimensional learning (and multiple performance expectations);

- explicit attention to the connections among scientific concepts; and

- the gathering of information about how far students have progressed along a defined sequence of learning.

Variation in Assessment Activities

Because NGSS-aligned instruction will naturally involve a range of activities, classroom assessment that is integral to instruction will need to involve a corresponding variation in the types of evidence it provides about student learning. Indeed, the distinction between instructional activities and assessment activities may be blurred, particularly when the assessment purpose is formative. A classroom assessment may be based on a classroom discussion or a group activity in which students explore and respond to each other's ideas and learn as they go through this process.

Science and engineering practices lend themselves well to assessment activities that can provide this type of evidence. For instance, when students are developing and using models, they may be given the opportunity to explain their models and to discuss them with classmates, thus providing the teacher with an opportunity for formative assessment reflection (illustrated in Example 4, below). Student discourse can give the teacher a window into students' thinking and help to guide lesson planning. A classroom assessment may also involve a formal test or diagnostic quiz. Or it may be based on artifacts that are the products of classroom activities, rather than on tasks designed solely for assessment purposes. These artifacts may include student work produced in the classroom, homework assignments (such as lab reports), a portfolio of student work collected over the course of a unit or a school year (which may include both artifacts of instruction as well as results from formal unit and end-of-course tests), or activities conducted using computer technology. A classroom assessment may occur in the context of group work or discussions, as long as the teacher ensures that all the students that need to be observed are in fact active participants. Summative assessments may also take a variety of forms, but they are usually intended to assess each student's independent accomplishments.

Tasks with Multiple Components

The NGSS performance expectations each blend a practice and, in some cases, also a crosscutting idea with an aspect of a particular core idea. In the past, assessment tasks have typically focused on measuring students' understanding of aspects of core ideas or of science practices as discrete pieces of knowledge. Progression in learning was generally thought of as knowing more or providing more complete and correct responses. Similarly, practices were intentionally assessed in a way that minimized specific content knowledge demands: assessments were more likely to ask for definitions than for actual use of the practice. Assessment developers took this approach in part to be sure they were obtaining accurate measures of clearly definable constructs.2 However, although understanding the language and terminology of science is fundamental and factual knowledge is very important, tasks that demand only declarative knowledge about practices or isolated facts would be insufficient to measure performance expectations in the NGSS.

___________

2"Construct" is generally used to refer to concepts or ideas that cannot be directly observed, such as "liberty." In the context of educational measurement, the word is used more specifically to refer to a particular body of content (knowledge, understanding, or skills) that an assessment is to measure. It can be used to refer to a very specific aspect of tested content (e.g., the water cycle) or a much broader area (e.g., mathematics).

As we note in Chapter 3, the performance expectations provide a start in defining the claim or inference that is to be made about student proficiency. However, it is also important to determine the observations (the forms of evidence in student work) that are needed to support the claims, and then to develop tasks or situations that will elicit the needed evidence. The task development approaches described in Chapter 3 are commonly used for developing external tests, but they can also be useful in guiding the design of classroom assessments. Considering the intended inference, or claim, about student learning will help curriculum developers and classroom assessment designers ensure that the tasks elicit the needed evidence.

As we note in Chapter 2, assessment tasks aligned with the NGSS performance expectations will need to have multiple components (that is, be composed of more than one kind of activity or question). They will need to include opportunities for students to engage in practices as a means to demonstrate their capacity to apply them. For example, a task designed to elicit evidence that a student can develop and use models to support explanations about structure-function relationships in the context of a core idea will need to have several components. It may require that students articulate a claim about selected structure-function relationships, develop or describe a model that supports the claim, and provide a justification that links evidence to the claim (such as an explanation of an observed phenomenon described by the model). A multicomponent task may include some short-answer questions, possibly some carefully designed selected-response questions, and some extended-response elements that require students to demonstrate their understandings (such as tasks in which students design an investigation or explain a pattern of data). For the purpose of making an appraisal of student learning, no single piece of evidence is likely to be sufficient; rather, the pattern of evidence across multiple components can provide a sufficient indicator of student understanding.
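The point that no single piece of evidence is sufficient can be made concrete with the three components named in the structure-function example (claim, model, justification). The minimal Python sketch below assumes a hypothetical 0-2 rubric for each component and a hypothetical decision rule. It is one of many possible ways to summarize a pattern of evidence, not a scoring method described in the source.

```python
# Hypothetical rubric: 0 = no evidence, 1 = partial evidence, 2 = full evidence.
REQUIRED = {"claim", "model", "justification"}


def appraise(scores, required=REQUIRED):
    """Summarize the pattern of evidence across a task's components.

    scores maps component names to rubric levels (0-2). The decision rule
    below is a hypothetical illustration, not a published scoring method.
    """
    missing = required - set(scores)
    if missing:
        return "insufficient evidence: " + ", ".join(sorted(missing))
    levels = [scores[name] for name in sorted(required)]
    if all(level == 2 for level in levels):
        return "consistent evidence"
    if any(level == 0 for level in levels):
        return "gap in evidence"
    return "partial evidence"


# A strong claim and model cannot compensate for a missing justification.
print(appraise({"claim": 2, "model": 2, "justification": 0}))
```

The rule deliberately does not sum the scores. A high total driven by one or two components would hide exactly the gap the pattern is meant to reveal.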


Making Connections

The NGSS emphasize the importance of the connections among scientific concepts. Thus, the NGSS performance expectations for one disciplinary core idea may be connected to performance expectations for other core ideas, either within the same domain or in other domains, in multiple ways: one core idea may be a prerequisite for understanding another, or a task may be linked to more than one performance expectation and thus involve more than one practice in the context of a given core idea. NGSS-aligned tasks will need to be constructed so that they provide information about how well students make these connections. For example, a task that focused only on students' knowledge of a particular model would be less revealing than one that probed students' understanding of the kinds of questions and investigations that motivated the development of the model. Example 1, "What Is Going on Inside Me?" (in Chapter 2), shows how a single assessment task can be designed to yield evidence related to multiple performance expectations, such as applying physical science concepts in a life science context. Tasks that do not address these connections will not fully capture or adequately support three-dimensional science learning.

Learning as a Progression

The framework and the NGSS address the process of learning science. They make clear that students should be encouraged to take an investigative stance toward their own and others' ideas, to be open about what they are struggling to understand, and to recognize that struggle as part of the way science is done, as well as part of their own learning process. Thus, revealing students' emerging capabilities with science practices and their partially correct or incomplete understandings of core ideas is an important function of classroom assessment. The framework and the NGSS also postulate that students will develop disciplinary understandings by engaging in practices that help them to question and explain the functioning of natural and designed systems. Although learning is an ongoing process for both scientists and students, students are emerging practitioners of science, not scientists, and their ways of acting and reasoning differ from those of scientists in important ways. The framework discusses the importance of seeing learning as a trajectory in which students gradually progress in the course of a unit or a year, and across the whole K-12 span, and organizing instruction accordingly.

The first example in this chapter, "Measuring Silkworms" (also discussed in Chapter 3), illustrates how this idea works in an assessment that is embedded in a larger instructional unit. As they begin the task, students are not competent data analysts. They are unaware of how displays can convey ideas or of professional conventions for display and the rationale for these conventions. In designing their own displays, students begin to develop an understanding of the value of these conventions. Their partial and incomplete understandings of data visualization have to be explicitly identified so teachers can help them develop a more general understanding. Teachers help students learn about how different mathematical practices, such as ordering and counting data, influence the shapes the data take in models. The students come to understand how the shapes of the data support inferences about population growth.

Thus, as discussed in Chapter 2, uncovering students' incomplete forms of practice and understanding is critical: NGSS-aligned assessments will need to clearly define the forms of evidence associated with beginning, intermediate, and sophisticated levels of knowledge and practice expected for a particular instructional sequence. A key goal of classroom assessments is to help teachers and students understand what has been learned and what areas will require further attention. NGSS-aligned assessments will also need to identify likely misunderstandings, productive ideas of students that can be built upon, and interim goals for learning.

The NGSS performance expectations are general: they do not specify the kinds of intermediate understandings of disciplinary core ideas students may express during instruction nor do they help teachers interpret students' emerging capabilities with science practices or their partially correct or incomplete understanding. To teach toward the NGSS performance expectations, teachers will need a sense of the likely progression at a more micro level, to answer such questions as:

- For this unit, where are the students expected to start, and where should they arrive?

- What typical intermediate understandings emerge along this learning path?

- What common logical errors or alternative conceptions present barriers to the desired learning or resources for beginning instruction?

- What new aspects of a practice need to be developed in the context of this unit?

Classroom assessment probes will need to be designed to generate enough evidence about students' understandings so that their locations on the intended pathway can be reliably determined, and it is clear what next steps (instructional activities) are needed for them to continue to progress. As we note in Chapter 2, only a limited amount of research is available to support detailed learning progressions: assessment developers and others who have been applying this approach have used a combination of research and practical experience to support depictions of learning trajectories.
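The requirement that probes yield enough evidence to place a student reliably on a pathway can be illustrated with a hypothetical decision rule. The minimal Python sketch below assumes that each of a student's responses has already been assigned a level on an ordered progression (for example, from a construct map of the kind discussed in Chapter 3). The function name and the threshold `min_evidence=2` are illustrative assumptions.

```python
def locate(responses, n_levels, min_evidence=2):
    """Place a student on an ordered progression of levels 1..n_levels.

    responses: the level assigned to each of the student's responses.
    Returns the highest level reached (or exceeded) by at least
    `min_evidence` responses, or None if there is too little evidence.
    """
    if any(not 1 <= r <= n_levels for r in responses):
        raise ValueError("each response level must be between 1 and n_levels")
    if len(responses) < min_evidence:
        return None
    # Scan from the top level down; the first level with enough supporting
    # responses is the most defensible placement.
    for level in range(n_levels, 0, -1):
        if sum(r >= level for r in responses) >= min_evidence:
            return level
    return None


print(locate([2, 3, 2, 4], n_levels=5))  # 3: only one response reaches level 4
print(locate([4], n_levels=5))           # None: one response is not enough
```

Raising `min_evidence` makes placements more conservative but requires more probes per student. That trade-off is why the probes themselves must be designed to generate enough evidence.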

SIX EXAMPLES

We have identified six example tasks and task sets that illustrate the elements needed to assess the development of three-dimensional science learning. As noted in Chapter 1, they all predate the publication of the NGSS. However, the constructs being measured by each of these examples are similar to those found in the NGSS performance expectations. Each example was designed to provide evidence of students' capabilities in using one or more practices as they attempt to reach and present conclusions about one or more core ideas: that is, all of them assess three-dimensional learning. Table 1-1 shows the NGSS disciplinary core ideas, practices, and crosscutting ideas that are closest to the assessment targets for all of the examples in the report.3

We emphasize that there are many possible designs for activities or tasks that assess three-dimensional science learning—these six examples are only a sampling of the possible range. They demonstrate a variety of approaches, but they share some common attributes. All of them require students to use some aspects of one or more science and engineering practices in the course of demonstrating and defending their understanding of aspects of a disciplinary core idea. Each of them also includes multiple components, such as asking students to engage in an activity, to work independently on a modeling or other task, and to discuss their thinking or defend their argument.

These examples also show how one can use classroom work products and discussions as formative assessment opportunities. In addition, several of the examples include summative assessments. In each case, the evidence produced provides teachers with information about students' thinking and their developing understanding that would be useful for guiding next steps in instruction. Moreover, the time students spend in doing and reflecting on these tasks should be seen as an integral part of instruction, rather than as a stand-alone assessment task. We note that the example assessment tasks also produce a variety of products and scorable evidence. For some we include illustrations of typical student work, and for others we include a construct map or scoring rubric used to guide the data interpretation process. Both are needed to develop an effective scoring system.

___________

3The particular combinations in the examples may not be the same as NGSS examples at that grade level, but each of these examples of classroom assessment involves integrated knowledge of the same general type as the NGSS performance expectations. However, because they predate the NGSS and its emphasis on crosscutting concepts, only a few of these examples include reference to a crosscutting concept, and none of them attempts to assess student understanding of, or disposition to invoke, such concepts.

Each example has been used in classrooms to gather information about particular core ideas and practices. The examples are drawn from different grade levels and assess knowledge related to different disciplinary core ideas. Evidence from their use documents that, with appropriate prior instruction, students can successfully carry out these kinds of tasks. We describe and illustrate each of these examples below and close the chapter with general reflections about the examples, as well as our overall conclusions and recommendations about classroom assessment.

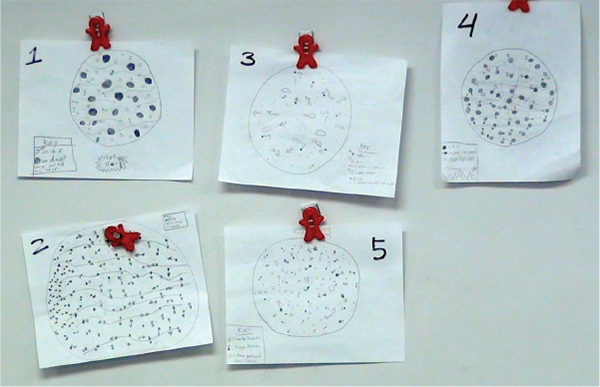

Example 3: Measuring Silkworms

The committee chose this example because it illustrates several of the characteristics we argue an assessment aligned with the NGSS must have: in particular, it allows the teacher to place students along a defined learning trajectory (see Figure 3-13 in Chapter 3) while assessing both a disciplinary core idea and a crosscutting concept.4 The assessment component is formative, in that it helps the teacher understand what students already know about data display and adjust instruction accordingly. This example, in which 3rd-grade students investigated the growth of silkworm larvae, first assesses students' conceptions of how data can be represented visually and then engages them in conversations about what different representations of the data they had collected reveal. It is closely tied to instruction: the assessment is embedded in a set of classroom activities.

The silkworm scenario is designed so that students' responses to the tasks can be interpreted in reference to a trajectory of increasingly sophisticated forms of reasoning. A construct map displayed in Figure 3-13 shows developing conceptions of data display. Once the students collect their data (measure the silkworms) and produce their own ways of visually representing their findings, the teacher uses the data displays as the basis for a discussion that has several objectives.

___________

4This example is also discussed in Chapter 3 in the context of using construct modeling for task design.


The teacher uses the construct map to identify data displays that demonstrate several levels on the trajectory. In a whole-class discussion, she invites students to consider what the different ways of displaying the data "show and hide" about the data and how they do so. During this conversation, the students begin to appreciate the basis for conventions about display.5 For example, in their initial attempt at representing the data they have collected, many of the students draw icons that resemble the organisms but are not of uniform size (see Figure 3-14 in Chapter 3). The mismatches between their icons and the actual relative lengths of the organisms become clear in the discussion. The teacher also invites students to consider how using mathematical ideas (related to ordering, counting, and intervals) helped them develop different shapes to represent the same data.
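The minimal Python sketch below shows how ordering the measurements, as opposed to counting them within intervals, gives the same data different shapes. The lengths are invented for illustration and are not the class's data. The 5 mm interval width is an arbitrary assumption.

```python
from collections import Counter

# Invented larva lengths in millimeters, for illustration only; these are
# NOT the measurements collected in the classroom example.
lengths = [23, 31, 35, 28, 37, 33, 40, 30, 34, 26, 36, 32, 45, 29, 33, 38]
BIN_WIDTH = 5  # hypothetical interval width in millimeters


def ordered_display(values):
    """Ordering: list every measurement from shortest to longest."""
    return sorted(values)


def interval_counts(values, width=BIN_WIDTH):
    """Counting within intervals: tally values per bin, keeping empty bins."""
    counts = Counter((v // width) * width for v in values)
    return {start: counts[start]
            for start in range(min(counts), max(counts) + 1, width)}


print(ordered_display(lengths))
for start, count in interval_counts(lengths).items():
    # One star per larva: the row lengths trace the shape of the distribution.
    print(f"{start}-{start + BIN_WIDTH - 1} mm | {'*' * count}")
```

With these made-up values, the interval display peaks in the middle bins and thins out toward both ends. The ordered list holds the same information but does not make that bell-like shape visible.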

The teacher's focus on shape serves as an assessment of what the framework and the NGSS define as the crosscutting concept of patterns. These activities also cultivate the students' capacity to think at a population level about the biological significance of the shapes, as they realize what the different representations of their measurements can tell them. Some of the student displays make a bell-like shape more evident, which inspires further questions and considerations in the whole-class discussion (see Figure 3-15 in Chapter 3): students notice that the tails of the distribution are comparatively sparse, especially for the longer larvae, and wonder why. As noted in Chapter 3, they speculate about possible reasons for the differences. The resulting discussion leads to conclusions about competition for resources, which in turn prompts them to consider not only individual silkworms but the entire population of silkworms. Hence, this assessment provides students with opportunities to learn about representations while also giving the teacher information about their understanding of a crosscutting concept (patterns) and disciplinary core ideas (population-level descriptions of variability and the mechanisms that produce it).

Example 4: Behavior of Air

The committee chose this example to show the use of classroom discourse to assess student understanding. The exercise is designed to focus students' attention on a particular concept: the teacher uses class discussion of the students' models of air particles to identify misunderstandings and then support students in collaboratively resolving them. This task assesses both students' understanding of the concept and their proficiency with the practices of modeling and developing oral arguments about what they have observed. This assessment is used formatively and is closely tied to classroom instruction.

___________

5This is a form of meta-representational competence; see diSessa (2004).

Classroom discussions can be a critical component of formative assessment. They provide a way for students to engage in scientific practices and for teachers to instantly monitor what the students do and do not understand. This example, from a unit for middle school students on the particle nature of matter, illustrates how a teacher can use discussions to assess students' progress and determine instructional next steps.6

In this example, 6th-grade students are asked to develop a model to explain the behavior of air. The activity leads them to an investigation of phase change and the nature of air. The example is from a single class period in a unit devoted to developing a conceptual model of a gas as an assemblage of moving particles with space between them; it consists of a structured task and a discussion guided by the teacher (Krajcik et al., 2013; Krajcik and Merritt, 2012). The teacher is aware of an area of potential difficulty for students, namely, a lack of understanding that there is empty space between the molecules of air. She uses group-developed models and student discussion of them as a probe to evaluate whether this understanding has been reached or needs further development.

When students come to this activity in the course of the unit, they have already reached consensus on several important ideas they can use in constructing their models. They have defined matter as anything that takes up space and has mass. They have concluded that gases—including air—are matter. They have determined through investigation that more air can be added to a container even when it already seems full and that air can be subtracted from a container without changing its size. They are thus left with questions about how more matter can be forced into a space that already seems to be full and what happens to matter when it spreads out to occupy more space. The students have learned from earlier teacher-led class discussions that simply stating that the gas changes "density" is not sufficient, since it only names the phenomenon—it does not indicate what actually makes it possible for differing amounts of gas to expand or contract to occupy the same space.

In this activity, students are given a syringe and asked to slowly push the plunger in and pull it out to explore air pressure. They notice the pressure against their fingers when pushing in and the resistance as they pull the plunger out. They find that little or no air escapes when they manipulate the plunger. They are asked to work in small groups to develop a model to explain what happens to the air so that the same amount of it can occupy the syringe regardless of the volume of space available. The groups are asked to provide models of the air with the syringe in three positions (see Figure 4-1). This modeling activity itself is not used as a formal assessment task; rather, it is the class discussion, in which students compare their models, that allows the teacher to diagnose the students' understanding. That is, the assessment, which is intended to be formative, takes place as the teacher probes students' understanding during classroom discussion.

___________

6This example was drawn from research conducted on classroom enactments of the IQWST curriculum materials (Krajcik et al., 2008; Shwartz et al., 2008). In field trials of IQWST, a diverse group of students responded to the task described in this example: 43 percent were white/Asian and 57 percent were non-Asian/minority, and 4 percent were English learners (Banilower et al., 2010).

Figure 4-2 shows the first models produced by five groups of students to depict the air in the syringe in its first position. The teacher asks the class to discuss the different models and to try to reach consensus on how to model the behavior of air to explain their observations. The class has agreed that there should be "air particles" (shown in each of their models as dark dots) and that the particles are moving (shown in some models by the arrows attached to the dots).

Most of their models are consistent in representing air as a mixture of different kinds of matter, including air, odor, dust, and "other particles." What is not consistent in their models is what is represented as between the particles: groups 1 and 2 show "wind" as the force moving the air particles; groups 3, 4, and 5 appear to show empty space between the particles. Exactly what, if anything, is in between the air particles emerges as a point of contention as the students discuss their models. After the class agrees that the consensus model should include air particles shown with arrows to demonstrate that the particles "are coming out in different directions," the teacher draws several particles with arrows and asks what to put next into the model. The actual classroom discussion is shown in Box 4-2.

The discussion shows how students engage in several scientific and engineering practices as they construct and defend their understanding about a disciplinary core idea. In this case, the key disciplinary idea is that there must be empty space between moving particles, which allows them to move, either to become more densely packed or to spread apart. The teacher can assess the way the students have drawn their models, which reveals that their understanding is not complete. They have agreed that all matter, including gas, is made of particles that are moving, but many of the students do not understand what is in between these moving particles. Several students indicate that they think there is air between the air particles, since "air is everywhere," and some assert that the particles are all touching. Other students disagree that there can be air between the particles or that air particles are touching, although they do not yet articulate an argument for empty space between the particles, an idea that students begin to understand more clearly in subsequent lessons. Drawing on her observations, the teacher asks questions and gives comments that prompt the students to realize that they do not yet agree on the question of what is between the particles. The teacher then uses this observation to make instructional decisions. She follows up on one student's critique of the proposed addition to the consensus model to focus the students on their disagreement and then sends the class back into their groups to resolve the question.

FIGURE 4-1 Models for air in a syringe in three situations for Example 4, "Behavior of Air."

SOURCE: Krajcik et al. (2013). Reprinted with permission from Sangari Active Science.

FIGURE 4-2 First student models for Example 4, "Behavior of Air."

SOURCE: Reiser et al. (2013). Copyright by the author; used with permission.

In this example, the students' argument about the models plays two roles: it is an opportunity for students to defend or challenge their existing ideas, and it is an opportunity for the teacher to observe what the students are thinking and to decide that she needs to pursue the issue of what is between the particles of air. It is important to note that the teacher does not simply bring up this question, but instead uses the disagreement that emerges from the discussion as the basis for the question. (Later interviews with the teacher reveal that she had in fact anticipated that the empty space between particles would come up and was prepared to take advantage of that opportunity.) The discussion thus provides insights into students' thinking beyond their written (and drawn) responses to a task. The models themselves provide a context in which the students can clarify their thinking and refine their models in response to the critiques, to make more explicit claims to explain what they have observed. Thus, this activity focuses their attention on key explanatory issues (Reiser, 2004).

This example also illustrates the importance of engaging students in practices to help them develop understanding of disciplinary core ideas while also giving teachers information to guide instruction. In this case, the teacher's active probing of students' ideas demonstrates the way that formative assessment strategies can be effectively used as a part of instruction. The discussion of the models not only reveals the students' understanding about the phenomenon, but also allows the teacher to evaluate progress, uncover problematic issues, and help students construct and refine their models.

Example 5: Movement of Water

The committee chose this example to show how a teacher can monitor developing understanding in the course of a lesson. "Clicker technology"7 is used to obtain individual student responses that show teachers what the students have learned from an activity; these responses then serve as the basis for structuring small-group discussions that address misunderstandings. This task assesses both students' understanding of a concept as it develops in the course of a lesson and their discussion skills. The assessments are used formatively and are closely tied to classroom instruction.

In the previous example (Example 4), the teacher orchestrates a discussion in which students present alternative points of view and then come to consensus about a disciplinary core idea through the practice of argumentation. However, many teachers may find it challenging to track students' thinking while also promoting the development of understanding for the whole class. The example on the movement of water was developed as part of a program for helping teachers learn to lead students in "assessment conversations" (Duschl and Gitomer, 1997).8 In the

___________

7Clicker technology, also known as classroom response systems, allows students to use hand-held clickers to respond to questions from a teacher. The responses are gathered by a central receiver and immediately tallied for the teacher—or the whole class—to see.

8This example is taken from the Contingent Pedagogies Project, which provides formative assessment tools for middle schools and supports teachers in integrating assessment activities into discussions for both small groups and entire classes. Of the students who responded to the task, 46 percent were Latino. For more information, see http://contingentpedagogies.org [October 2013].


BOX 4-2

STUDENT-TEACHER DIALOGUE

Haley's objection: air is everywhere

Ms. B: OK. Now what?

S: Just draw like little. . . .

Haley: I think you should color the whole circle in, because dust . . . I mean air is everywhere, so. . . .

Miles: The whole circle?

Ms. B: So, I color the whole thing in.

Haley: Yeah.

Ms. B: So, if I do one like that, because I haven't seen one up here yet. If I color this whole thing in. . . .

[Ms. B colors in the whole region completely to show the air as Haley suggests.]

Michael: Then how would you show that . . . ?

Ms. B: Then ask . . . ask Haley some questions.

Students: How could that be? How would you show that?

Ms. B: Haley, people have some questions for you.

Some students object to Haley's proposal:

Frank: How would you show air?

Haley: Air is everywhere, so the air would be everything.

Ss: Yeah.

Alyssa: But then, how would you show the other molecules? I mean, you said air is everything, but then how would you show the other . . .?

Ss: Yeah, because . . . [Multiple students talking]

Haley: What? I didn't hear your question.

Alyssa: Um, I said if . . . You said air is everywhere, right?

Haley: Yeah.

Alyssa: . . . so, that's why you wanted to color it in. But there's also other particles other than air, like dust and etc. and odors and things like that, so, how would you show that?

Miles: How are we going to put in the particles?

Ms. B: Haley, can you answer her?

Haley: No.

Ms. B: Why?

Haley: I don't know.

Other student: Because there is no way.

Ms. B: Why can't you answer?


Haley: What? I don't know.

Ms. B: Is what she's saying making sense?

Haley: Yeah.

Ms. B: What is it that you're thinking about?

Haley: Um . . . that maybe you should take . . . like, erase some of it to show the odors and stuff.

Addison: No, wait, wait!

Ms. B: All right, call on somebody else.

Addison proposes a compromise, and Ms. B pushes for clarification

Addison: Um, I have an idea. Like since air is everywhere, you might be able to like use a different colored marker and put like, um, the other molecules in there, so you're able to show that those are in there and then air is also everywhere.

Jerome: Yeah. I was gonna say that, or you could like erase it. If you make it all dark, you can just erase it and all of them will be.

Frank: Just erase some parts of the, uh . . . yeah, yeah, just to show there's something in between it.

Ms. B: And what's in between it?

Ss: The dust and the particles. Air particles. Other odors.

Miles: That's like the same thing over there.

Alyssa: No, the colors are switched.

Ms. B: Same thing over where?

Alyssa: The big one, the consensus.

Ms. B: On this one?

Alyssa: Yeah.

Ms. B: Well, what she's saying is that I should have black dots every which way, like that. [Ms. B draws the air particles touching one another in another representation, not in the consensus model, since it is Haley's idea.]

Students: No what? Yeah.

Ms. B: Right?

Students: No. Sort of. Yep.

Ms. B: OK. Talk to your partners. Is this what we want? [pointing to the air particles touching one another in the diagram]

Students discuss in groups whether air particles are touching or not, and what is between the particles if anything.

SOURCE: Reiser et al. (2013). Copyright by the author; used with permission.


task, middle school students engage in argumentation about disciplinary core ideas in earth science. As with the previous example, the formative assessment activity is more than just the initial question posed to students; it also includes the discussion that follows from student responses to it and the teacher's decisions about what to do next after she brings the discussion to a close.

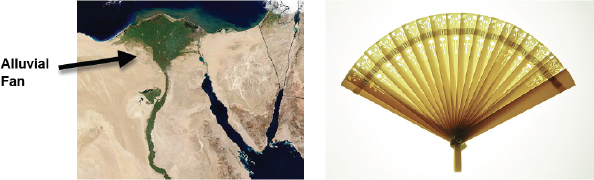

In this activity, which also takes place in a single class session, the teacher structures a conversation about how the movement of water affects the deposition of surface and subsurface materials. The activity involves disciplinary core ideas (similar to Earth's systems in the NGSS) and engages students in practices, including modeling and constructing examples. It also requires students to reason about models of geosphere-hydrosphere interactions, which is an example of the crosscutting concept pertaining to systems and system models.9

Teachers use classroom clicker technology to pose multiple-choice questions that are carefully designed to elicit students' ideas related to the movement of water. These questions have been tested in classrooms, and the response choices reflect common student ideas, including those that are especially problematic. In the course of both small-group and whole-class discussions, students construct and challenge possible explanations of the process of deposition. If students have difficulty in developing explanations, teachers can guide students to activities designed to improve their understanding, such as interpreting models of the deposition of surface and subsurface materials.

When students begin this activity, they will just have completed a set of investigations of weathering, erosion, and deposition that are part of a curriculum on investigating Earth systems.10 Students will have had the opportunity to build physical models of these phenomena and frame hypotheses about how water will move sediment using stream tables.11 The teacher begins the formative assessment activity by projecting on a screen a question about the process of deposition designed to check students' understanding of the activities they have completed: see Figure 4-3. Students select their answers using clickers.

___________

9The specific NGSS core idea addressed is similar to MS-ESS2.C: "How do the properties and movement of water shape Earth's surface and affect its systems?" The closest NGSS performance expectation is MS-ESS2-c: "Construct an explanation based on evidence for how geoscience processes have changed Earth's surface at varying time and spatial scales."

10This curriculum, for middle school students, was developed by the American Geosciences Institute. For more information, see http://www.agiweb.org/education/ies [July 2013].

11Stream tables are models of stream flows set up in large boxes filled with sedimentary material and tilted so that water can flow through.


FIGURE 4-3 Sample question for Example 5, "Movement of Water."

The green areas marked above show the place where a river flows into an ocean. Why does this river look like a triangle (or fan) where it flows into the ocean? Be prepared to explain your response.

Answer A: Sediment is settling there as the land becomes flatter.

Answer B: The water is transporting all the sediment to the ocean, where it is being deposited.

Answer C: The water is moving faster near the mouth of the delta.

(The correct answer is A.)

SOURCE: NASA/GSFC/JPL/LaRC, MISR Science Team (2013) and Los Angeles County Museum of Art (2013).

Pairs or small groups of students then discuss their reasoning and offer explanations for their choices to the whole class. Teachers help students begin the small-group discussions by asking why someone might select A, B, or C, implying that any of them could be a reasonable response. Teachers press students for their reasoning and invite them to compare their own reasoning to that of others, using specific discussion strategies (see Michaels and O'Connor, 2011; National Research Council, 2007). After discussing their reasoning, students again vote, using their clickers. In this example, the student responses recorded using the clicker technology are scorable. A separate set of assessments (not discussed here) produces scores to evaluate the efficacy of the project as a whole.
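The two rounds of voting described above can be summarized with a simple tally. The sketch below is hypothetical: the source does not describe the clicker system's data format or software, so it assumes each vote arrives as a single letter ("A", "B", or "C") and ignores anything else as an invalid response. The function names and sample votes are invented for illustration; only the correct answer, A, comes from Figure 4-3.

```python
from collections import Counter

CHOICES = ("A", "B", "C")  # answer options in Figure 4-3


def tally(votes, choices=CHOICES):
    """Count votes for each choice, listing choices that received no votes."""
    counts = Counter(v for v in votes if v in choices)
    return {c: counts[c] for c in choices}


def percent_choosing(votes, answer, choices=CHOICES):
    """Percentage of valid votes that selected `answer` (0.0 if no valid votes)."""
    valid = [v for v in votes if v in choices]
    if not valid:
        return 0.0
    return 100.0 * sum(v == answer for v in valid) / len(valid)


def compare_rounds(first_vote, second_vote, correct="A"):
    """Summarize the vote before and after the small-group discussion."""
    return {
        "first": tally(first_vote),
        "second": tally(second_vote),
        "pct_correct_first": percent_choosing(first_vote, correct),
        "pct_correct_second": percent_choosing(second_vote, correct),
    }


if __name__ == "__main__":
    # Hypothetical votes from one class, for illustration only.
    before = list("ABBCABCBBA")
    after = list("AABAACABAA")
    print(compare_rounds(before, after))
```

Comparing the two tallies shows how the distribution of answers shifts after discussion; by itself it says nothing about the reasoning behind each vote, which is what the discussion is meant to surface.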

The program materials include a set of "contingent activities" for teachers to use if students have difficulty meeting a performance expectation related to an investigation. Teachers use students' responses to decide which contingent activities are needed, and thus they use the activity as an informal formative assessment. In these activities, students might be asked to interpret models, construct explanations, and make predictions using those models as a way to deepen their understanding of Earth systems. In this example about the movement of water, students who are having difficulty understanding can view an animation of deposition and then make a prediction about a pattern they might expect to find at the mouth of a river where sediment is being deposited.

The aim of this kind of assessment activity is to guide teachers in using assessment techniques to improve student learning outcomes.12 The techniques used in this example demonstrate a means of rapidly assessing how well students have mastered a complex combination of practices and concepts in the midst of a lesson, which allows teachers to immediately address areas students do not understand well. The contingent activities that provide alternative ways for students to master the core ideas (by engaging in particular practices) are an integral component of the formative assessment process.

Example 6: Biodiversity in the Schoolyard

The committee chose this example to show the use of multiple interrelated tasks to assess a disciplinary core idea, biodiversity, with multiple science practices. As part of an extended unit, students complete four assessment tasks. The first three serve formative purposes and are designed to function close to instruction, informing the teacher about how well students have learned key concepts and mastered practices. The last assessment task serves a summative purpose, as an end-of-unit test, and is an example of a proximal assessment. The tasks address concepts related to biodiversity and science practices in an integrated fashion.

This set of four assessment tasks was designed to provide evidence of 5th-grade students' developing proficiency with a body of knowledge that blends a disciplinary core idea (biodiversity; LS4 in the NGSS; see Box 2-1 in Chapter 2) and a crosscutting concept (patterns) with three different practices: planning and carrying out investigations, analyzing and interpreting data, and constructing explanations (see Songer et al., 2009; Gotwals and Songer, 2013). These tasks, developed by researchers as part of an examination of the development of complex reasoning, are intended for use in an extended unit of study.13

___________

12A quasi-experimental study compared the learning gains for students in classes that used the approach of the Contingent Pedagogies Project with gains for students in other classes in the same school district that used the same curriculum but not that approach. The students whose teachers used the Contingent Pedagogies Project demonstrated greater proficiency in earth science objectives than did students in classrooms in which teachers only had access to the regular curriculum materials (Penuel et al., 2012).

13The tasks were given to a sample of 6th-grade students in the Detroit Public School System, the majority of whom were racial/ethnic minority students (for details, see Songer et al., 2009).


Formative Assessment Tasks

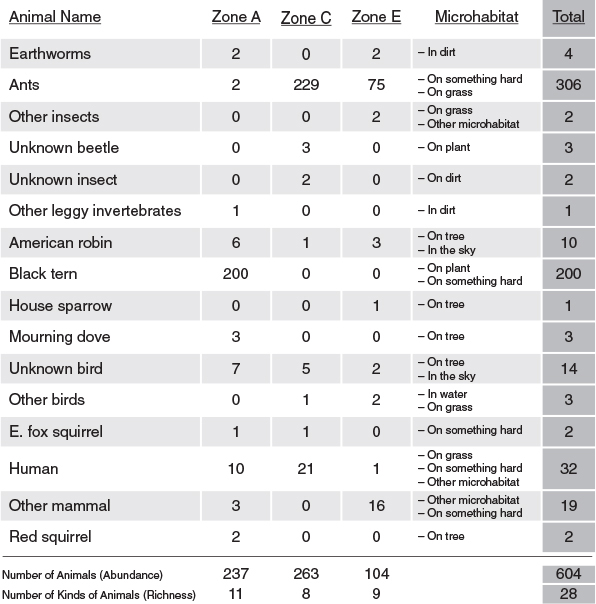

Task 1: Collect data on the number of animals (abundance) and the number of different species (richness) in schoolyard zones.

Instructions: Once you have formed your team, your teacher will assign your team to a zone in the schoolyard. Your job is to go outside and spend approximately 40 minutes observing and recording all of the animals and signs of animals that you see in your schoolyard zone during that time. Use the BioKIDS application on your iPod to collect and record all your data and observations.

In responding to this task, students use an Apple iPod to record their information. The data from each iPod are uploaded and combined into a spreadsheet that contains all of the students' data (see Figure 4-4). Teachers use data from individual groups or from the whole class as formative information about students' abilities to collect and record data for use in the other tasks.
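Combining each team's uploaded observations into one class summary is a simple aggregation step. The sketch below does not reproduce the BioKIDS software, whose data format the source does not describe. It assumes, hypothetically, that each team's upload is a list of (zone, animal, count) records, merges them, and writes a zone-by-animal table, similar in layout to Table 4-1, as CSV text that a spreadsheet can open. Function names and sample records are illustrative only.

```python
import csv
import io
from collections import defaultdict


def class_summary(team_uploads):
    """Merge per-team lists of (zone, animal, count) into {zone: {animal: total}}."""
    table = defaultdict(lambda: defaultdict(int))
    for records in team_uploads:
        for zone, animal, count in records:
            table[zone][animal] += count
    # Convert nested defaultdicts to plain dicts for predictable printing.
    return {zone: dict(animals) for zone, animals in table.items()}


def summary_to_csv(summary):
    """Return CSV text with one row per animal and one column per zone."""
    zones = sorted(summary)
    animals = sorted({a for counts in summary.values() for a in counts})
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["Animal Name", *zones])
    for animal in animals:
        writer.writerow([animal, *(summary[z].get(animal, 0) for z in zones)])
    return buf.getvalue()


if __name__ == "__main__":
    # Hypothetical uploads from two teams, for illustration only.
    uploads = [
        [("Zone A", "Ants", 3), ("Zone A", "Pillbugs", 1)],
        [("Zone A", "Ants", 1), ("Zone B", "Robins", 2)],
    ]
    print(summary_to_csv(class_summary(uploads)))
```

Animals not seen in a zone appear as 0 in that zone's column, so a teacher reviewing the merged table can distinguish "observed zero times" from a missing upload only by checking which teams reported.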

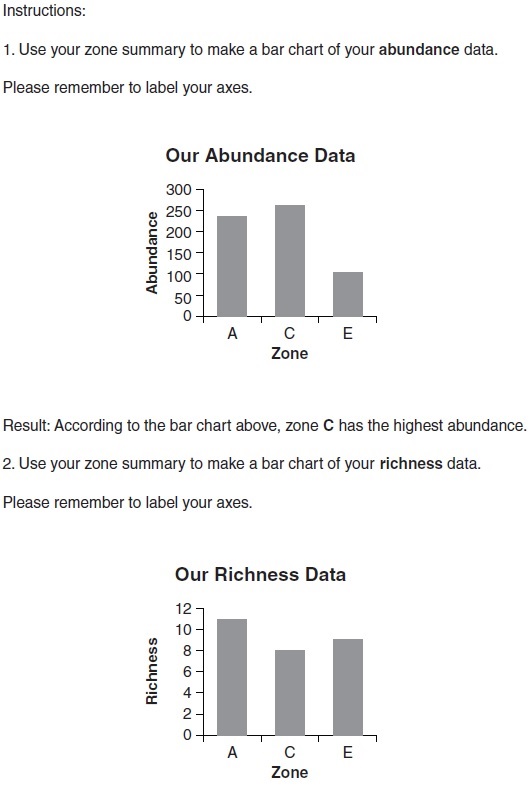

Task 2: Create bar graphs that illustrate patterns in abundance and richness data from each of the schoolyard zones.

Task 2 assesses students' ability to construct and interpret graphs of the data they have collected (an important element of the NGSS practice "analyzing and interpreting data"). The exact instructions for Task 2 appear in Figure 4-5. Teachers use the graphs the students create for formative purposes, to make decisions about further instruction students may need. For example, if students are weak in the practices, the teacher may decide to help them draw accurate bars or label axes appropriately. If students are weak in their understanding of the core idea, the teacher might review the concepts of species abundance or species richness.

Task 3: Construct an explanation to support your answer to the question: Which zone of the schoolyard has the greatest biodiversity?

Before undertaking this task, students complete an activity that helps them understand a definition of biodiversity: "An area is considered biodiverse if it has both a high animal abundance and high species richness." They are also given hints (reminders) that an explanation has three key parts: a claim, more than one piece of evidence, and reasoning. In addition, they are given the definitions of relevant terms. This task allows the teacher to see how well students have understood the concept and can support their ideas about it. Instructions for Task 3 and student answers are shown in Box 4-3.

FIGURE 4-4 Class summary of animal observations in the schoolyard, organized by region (schoolyard zones), for Example 6, "Biodiversity in the Schoolyard."

FIGURE 4-5 Instructions for Task 2 for Example 6, "Biodiversity in the Schoolyard."

NOTE: See text for discussion.

Summative Assessment Task

Task 4: Construct an explanation to support an answer to the question: Which zone of the schoolyard has the greatest biodiversity?

For the end-of-unit assessment, the task presents students with excerpts from a class data collection summary, shown in Table 4-1, and asks them to construct an explanation, as they did in Task 3. The difference is that in Task 4 the hints are removed: at the end of the unit, students are expected to show that they understand what constitutes a full explanation without a reminder. The task and coding rubric used for Task 4 are shown in Box 4-4.
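Using the unit's own definitions (abundance as the number of animals observed, richness as the number of different species), the summary rows of Table 4-1 can be checked directly from its counts. The Python below is an illustrative sketch, not part of the assessment materials; the counts are copied from Table 4-1, and the function names are illustrative.

```python
# Counts copied from Table 4-1: animal -> {zone: number observed}.
TABLE_4_1 = {
    "Pillbugs": {"Zone A": 1, "Zone B": 3, "Zone C": 4},
    "Ants": {"Zone A": 4, "Zone B": 6, "Zone C": 10},
    "Robins": {"Zone A": 0, "Zone B": 2, "Zone C": 0},
    "Squirrels": {"Zone A": 0, "Zone B": 2, "Zone C": 2},
    "Pigeons": {"Zone A": 1, "Zone B": 1, "Zone C": 0},
}
ZONES = ("Zone A", "Zone B", "Zone C")


def abundance(table, zone):
    """Total number of animals observed in one zone."""
    return sum(counts[zone] for counts in table.values())


def richness(table, zone):
    """Number of different species seen at least once in one zone."""
    return sum(1 for counts in table.values() if counts[zone] > 0)


def schoolyard_richness(table):
    """Number of species seen at least once anywhere in the schoolyard."""
    return sum(1 for counts in table.values() if any(n > 0 for n in counts.values()))


if __name__ == "__main__":
    for zone in ZONES:
        print(zone, "abundance:", abundance(TABLE_4_1, zone),
              "richness:", richness(TABLE_4_1, zone))
    print("Total abundance:", sum(abundance(TABLE_4_1, z) for z in ZONES))
    print("Total richness:", schoolyard_richness(TABLE_4_1))
```

Running it reproduces the table's summary rows: abundance 6, 14, and 16 (total 36) and richness 3, 5, and 3 (total 5). Zone C has the highest abundance while Zone B has the highest richness, so the two measures do not single out the same zone, which is why an explanation has to weigh both, as the unit's definition of biodiversity requires.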

The Set of Tasks

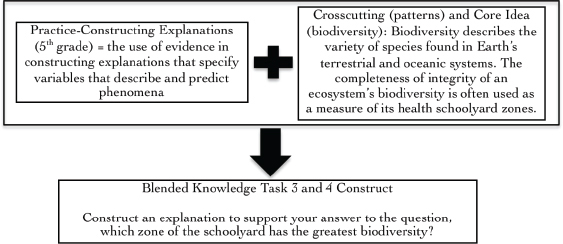

This set of tasks illustrates two points. First, using tasks to assess several practices in the context of a core idea together with a crosscutting concept can provide a wider range of information about students' progression than would tasks that focused on only one practice. Second, classroom assessment tasks in which core ideas, crosscutting concepts, and practices are integrated can be used for both formative and summative purposes. Table 4-2 shows the core idea, crosscutting concept, practices, assessment purposes, and performance expectation targets for assessment for each of the tasks. Each of these four tasks was designed to provide information about a single performance expectation related to the core idea, and each performance expectation focused on one of three practices. Figure 4-6 illustrates the way these elements fit together to identify the target for assessment of Tasks 3 and 4.

With respect to the second point, the design of each task was determined by its purpose (formative or summative) and the point in the curriculum at which it was to be used. Assessment tasks may, by design, include more or less guidance for students, depending on the type of information they are intended to collect. Because learning is a process that occurs over time, a teacher might choose assessment tasks with fewer guides (or scaffolds) as students progress through a curriculum, in order to gather evidence of what they can demonstrate without assistance. Thus, the task developers offered a practice progression to illustrate the different levels of


BOX 4-3

INSTRUCTIONS AND SAMPLE STUDENT ANSWERS FOR TASK 3 IN EXAMPLE 6, "BIODIVERSITY IN THE SCHOOLYARD"

Instructions: Using what you have learned about biodiversity, the information from your class summary sheet, and your bar charts for abundance and richness, construct an explanation to answer the following scientific question:

Scientific Question: Which zone in the schoolyard has the highest biodiversity?

My Explanation

Make a CLAIM: Write a complete sentence that answers the scientific question.

*Zone A has the greatest biodiversity.*

Hint: Look at your abundance and richness data sheets carefully.

Give your REASONING: Write the scientific concept or definition that you thought about to make your claim.

Hint: Think about how biodiversity is related to abundance and richness.

*Biodiversity is related to abundance and richness because it shows the two amounts in one word.*

Give your EVIDENCE: Look at your data and find two pieces of evidence that help answer the scientific question.

Hint: Think about which zone has the highest abundance and richness.

1. *Zone A has the most richness.*

2. *Zone A has a lot of abundance.*

NOTES: Student responses are shown in italics. See text for discussion.


TABLE 4-1 Schoolyard Animal Data for Example 6 Summative Task, "Biodiversity in the Schoolyard"

| Animal Name | Zone A | Zone B | Zone C | Total |
|---|---|---|---|---|
| Pillbugs | 1 | 3 | 4 | 8 |
| Ants | 4 | 6 | 10 | 20 |
| Robins | 0 | 2 | 0 | 2 |
| Squirrels | 0 | 2 | 2 | 4 |
| Pigeons | 1 | 1 | 0 | 2 |
| Animal abundance | 6 | 14 | 16 | 36 |
| Animal richness | 3 | 5 | 3 | 5 |

guidance that tasks might include, depending on their purpose and the stage students will have reached in the curriculum when they undertake the tasks.
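The two summary rows of Table 4-1 follow directly from the animal counts: abundance is the number of animals observed in a zone, and richness is the number of different species observed there. The short Python sketch below recomputes both rows. The names are illustrative, and the ranking rule (richness first, abundance as a tie-breaker) is an assumption chosen to mirror the reasoning in the Box 4-4 rubric, not a formula given by the task developers.

```python
# Animal counts per zone, copied from Table 4-1.
COUNTS = {
    "Pillbugs": {"A": 1, "B": 3, "C": 4},
    "Ants": {"A": 4, "B": 6, "C": 10},
    "Robins": {"A": 0, "B": 2, "C": 0},
    "Squirrels": {"A": 0, "B": 2, "C": 2},
    "Pigeons": {"A": 1, "B": 1, "C": 0},
}
ZONES = ["A", "B", "C"]


def abundance(zone: str) -> int:
    """Total number of animals observed in a zone."""
    return sum(by_zone[zone] for by_zone in COUNTS.values())


def richness(zone: str) -> int:
    """Number of different species with at least one animal in a zone."""
    return sum(1 for by_zone in COUNTS.values() if by_zone[zone] > 0)


def most_biodiverse(zones: list[str]) -> str:
    """Hypothetical rule: highest richness wins; abundance breaks ties."""
    return max(zones, key=lambda z: (richness(z), abundance(z)))


for z in ZONES:
    print(f"Zone {z}: abundance={abundance(z)}, richness={richness(z)}")
print("Most biodiverse:", most_biodiverse(ZONES))
```

Under this rule Zone B comes out on top (richness 5, abundance 14), matching the correct claim in Box 4-4. Zone C has the greatest abundance (16) but only three species, which illustrates why abundance alone is not enough.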

Box 4-5 shows a progression for the design of tasks that assess one example of three-dimensional learning: the practice of constructing explanations, combined with one core idea and one crosscutting concept. This progression was based on studies of students' development of three-dimensional learning over time, which showed that students need less support in tackling assessment tasks as their knowledge develops (see, e.g., Songer et al., 2009).
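The progression in Box 4-5 has a regular structure: for each kind of open-ended task, every added type of guide lowers the level by one and adds a "+" to its label. As a minimal sketch, that structure can be encoded as a lookup in Python; the names `Guides`, `NO_GUIDE_LEVEL`, and `progression_level` are illustrative and not part of the original materials.

```python
from enum import Enum


class Guides(Enum):
    """Amount of scaffolding in a task; the value is the number of guide types."""
    NONE = 0               # no guides
    CORE_IDEA = 1          # core idea guides only ("+")
    CORE_AND_PRACTICE = 2  # core idea guides plus guides defining the practice ("++")


# Level of each open-ended task type when no guides are given (Box 4-5).
NO_GUIDE_LEVEL = {"claim_with_evidence": 4, "explanation": 7}


def progression_level(task: str, guides: Guides) -> str:
    """Return the Box 4-5 level label (e.g. '5++') for an open-ended task.

    Each guide type lowers the level by one and adds a '+' to the label.
    Level 1 (choosing a claim or evidence from given options) is not modeled.
    """
    level = NO_GUIDE_LEVEL[task] - guides.value
    return f"{level}{'+' * guides.value}"
```

For example, Task 3 (an explanation task with both core idea and practice guides) maps to `"5++"`, and Task 4 (no guides) maps to `"7"`, consistent with the classifications given for those tasks.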

Tasks 3 and 4, which target the same performance expectation but have different assessment purposes, illustrate this point. Task 3 was implemented midway through the curricular unit to provide formative information for the teacher on the kinds of three-dimensional learning students could demonstrate with the assistance of guides. Task 3 was classified as a Level 5 task (in terms of the progression shown in Box 4-5) and included two types of guides for the students (core idea guides in text boxes and practice guides that offer the definition of claim, evidence, and reasoning). Task 4 was classified as a Level 7 task because it did not provide students with any guides to the construction of explanations.
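The coding rubric for Task 4 in Box 4-4 can be read as a small decision procedure. The Python sketch below is one interpretation of it, not the developers' scoring procedure: the function name and parameters are hypothetical, a score of 0 for an incorrect claim is an assumption (the rubric defines scores only for responses with a correct claim), and a correct claim with correct reasoning but no correct evidence, which the rubric does not describe, is assumed to earn 1 point.

```python
def score_task4(claim_correct: bool, correct_evidence: int,
                incorrect_evidence: int, reasoning_correct: bool) -> int:
    """Score a Task 4 response (0-4) under one reading of the Box 4-4 rubric."""
    if not claim_correct:
        return 0  # assumption: the rubric only defines scores for a correct claim
    if correct_evidence >= 2 and incorrect_evidence == 0:
        # 4 points with correct reasoning; 3 points if it is incorrect or missing
        return 4 if reasoning_correct else 3
    if correct_evidence >= 1:
        # 1 correct piece, or 2 correct pieces alongside an incorrect one
        return 2
    # No correct evidence: 1 point (assumed to apply whatever the reasoning)
    return 1
```

A response that states the correct Zone B claim, cites both pieces of evidence listed in Box 4-4, and connects them with the richness-and-abundance reasoning corresponds to `score_task4(True, 2, 0, True)`, which returns 4.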

Example 7: Climate Change

The committee chose this flexible online assessment task to demonstrate how assessment can be customized to suit different purposes. It was designed to probe student understanding and to facilitate a teacher's review of responses. Computer software allows teachers to tailor online assessment tasks to their purpose and to the stage of learning that students have reached, by offering more or less supporting information and guidance. The tasks may be used for both formative and summative purposes: they are designed to function close to instruction.

BOX 4-4

TASK AND CODING RUBRIC FOR TASK 4 IN EXAMPLE 6, "BIODIVERSITY IN THE SCHOOLYARD"

Write a scientific argument to support your answer to the following question.

Scientific Question: Which zone has the highest biodiversity?

Coding

4 points: Contains all parts of explanation (correct claim, 2 pieces of evidence, reasoning)

3 points: Contains correct claim and 2 pieces of evidence but incorrect or no reasoning

2 points: Contains correct claim and 1 piece of correct evidence OR 2 pieces of correct evidence and 1 piece of incorrect evidence

1 point: Contains correct claim, but no evidence or incorrect evidence and incorrect or no reasoning

Correct Responses

Claim

Correct: Zone B has the highest biodiversity.

Evidence

1. Zone B has the highest animal richness.

2. Zone B has high animal abundance.

Reasoning

Explicit written statement that ties evidence to claim with a reasoning statement: that is, "Zone B has the highest biodiversity because it has the highest animal richness and high animal abundance. Biodiversity is a combination of both richness and abundance, not just one or the other."

This online assessment task is part of a climate change curriculum for high school students. It targets the performance expectation that students use geoscience data and the results from global climate models to make evidence-based

Suggested Citation:"4 Classroom Assessment." National Research Council. 2014. Developing Assessments for the Next Generation Science Standards. Washington, DC: The National Academies Press. doi: 10.17226/18409.

×

TABLE 4-2 Characteristics of Tasks in Example 6, "Biodiversity in the Schoolyard"

| Core Idea | Crosscutting Concepts | Practices | Purpose of Assessment | Target for Assessment: Performance Expectation |

| LS4.D Biodiversity and Humans | Patterns | Planning and carrying out investigations | Formative | Task 1. Collect data on the number of animals (abundance) and the number of different species (richness) in schoolyard zones. |

| Analyzing and interpreting data | Formative | Task 2. Create bar graphs that illustrate patterns in abundance and richness data from each of the schoolyard zones. | ||

| Constructing explanations | Formative | Task 3. Construct an explanation to support your answer to the question: Which zone of the schoolyard has the greatest biodiversity? | ||

| Constructing explanations | Summative | Task 4. Construct an explanation to support your answer to the question: Which zone of the schoolyard has the greatest biodiversity? | ||

forecasts of the impacts of climate change on organisms and ecosystems.14 This example illustrates four potential benefits of online assessment tasks:

- the capacity to present data from various external sources to students;

- the capacity to make information about the quality and range of student responses continuously available to teachers so they can be used for formative purposes;

- the possibility that tasks can be modified to provide more or less support, or scaffolding, depending on the point in the curriculum at which the task is being used; and

___________

14This performance expectation is similar to two in the NGSS ones: HS-LS2-2 and HS-ESS3-5, which cover the scientific practices of analyzing and interpreting data and obtaining, evaluating, and communicating evidence.

Suggested Citation:"4 Classroom Assessment." National Research Council. 2014. Developing Assessments for the Next Generation Science Standards. Washington, DC: The National Academies Press. doi: 10.17226/18409.

×

FIGURE 4-6 Combining practice, crosscutting concept, and core idea to form a blended learning performance expectation, assessed in Tasks 3 and 4, for Example 6, "Biodiversity in the Schoolyard."

- the possibility that the tasks can be modified to be more or less active depending on teachers' or students' preferences.

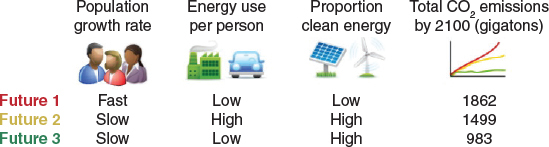

In the instruction that takes place prior to this task, students will have selected a focal species in a particular ecosystem and studied its needs and its distribution within the ecosystem. They will also have become familiar with a set of model-based climate projections, called Future 1, 2, and 3, that represent climate change effects of differing severity. Those projections are taken from the Intergovernmental Panel on Climate Change (IPCC) predictions for the year 2100 (Intergovernmental Panel on Climate Change, 2007): see Figure 4-7. The materials provided online as part of the activity include

- global climate model information presented in a table showing three different IPCC climate change scenarios (shown in Figure 4-7);

- geoscience data in the form of a map of North America that illustrates the current distribution of locations with optimal biotic and abiotic15 conditions for a species and their distribution as predicted by the IPCC Future 3 scenario: see Figure 4-8; and

- an online guide that helps students develop predictions by prompting them for what is needed and recording their responses in a database that teachers and students can use. (The teacher can choose whether or not to allow students access to the pop-up text that describes what is meant by a claim or by evidence.)

___________

15The biotic component of an environment consists of the living species that populate it, while the abiotic components are the nonliving influences, such as geography, soil, water, and climate, that are specific to the particular region.

BOX 4-5

PROGRESSION FOR MULTIDIMENSIONAL LEARNING TASK DESIGN

This progression covers constructing a claim with evidence and constructing explanations with and without guidance. The + and ++ symbols represent the number of guides provided in the task.

| Level | Task Description |
|---|---|
| Level 7 | Student is provided with a question and is asked to construct a scientific explanation (no guides). |
| Level 6+ | Student is provided with a question and is asked to construct a scientific explanation (with core idea guides only). |
| Level 5++ | Student is provided with a question and is asked to construct a scientific explanation (with core idea guides and guides defining claim, evidence, and reasoning). |
| Level 4 | Student is provided with a question and is asked to make a claim and back it with evidence (no guides). |
| Level 3+ | Student is provided with a question and is asked to make a claim and back it with evidence (with core idea guides only). |
| Level 2++ | Student is provided with a question and is asked to make a claim and back it with evidence (with core idea guides and guides defining claim and evidence). |
| Level 1 | Student is provided with evidence and is asked to choose the appropriate claim, OR student is provided with a claim and is asked to choose the appropriate evidence. |

SOURCE: Adapted from Gotwals and Songer (2013).
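The online guide described in the list of materials above combines three behaviors: prompting students for each part of a prediction, recording their answers, and a teacher-controlled switch for the claim and evidence pop-up text. The report does not describe how the software is built, so the Python below is only a sketch under stated assumptions: `PredictionTaskSettings`, `build_prompts`, `record_response`, and the pop-up wording are all hypothetical, and an in-memory list stands in for the database.

```python
from dataclasses import dataclass

PARTS = ("claim", "reasoning", "evidence")

# Hypothetical pop-up wording paraphrased from this chapter, not the actual task text.
POPUP_TEXT = {
    "claim": "Your prediction: will climate change affect your focal species?",
    "evidence": "Information from the climate projection maps that supports your claim.",
}


@dataclass
class PredictionTaskSettings:
    """Hypothetical teacher-controlled settings for the prediction task."""
    show_popups: bool = True  # may students open the claim/evidence pop-up text?


def build_prompts(settings: PredictionTaskSettings) -> dict[str, str]:
    """Return the prompt shown for each part of the response."""
    prompts = {}
    for part in PARTS:
        prompt = f"Enter your {part}."
        # Only claim and evidence have pop-up text in the task description.
        if settings.show_popups and part in POPUP_TEXT:
            prompt += f" (Help: {POPUP_TEXT[part]})"
        prompts[part] = prompt
    return prompts


def record_response(db: list[dict], student: str, answers: dict[str, str]) -> None:
    """Store one student's answers; reject a response with any part left blank."""
    missing = [p for p in PARTS if not answers.get(p, "").strip()]
    if missing:
        raise ValueError(f"missing parts: {', '.join(missing)}")
    db.append({"student": student, **{p: answers[p] for p in PARTS}})
```

Turning `show_popups` off removes the extra support, which corresponds to moving the task toward the less-guided end of a progression such as the one in Box 4-5.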

The task asks students to make and support a prediction in answer to the question, "In Future 3, would climate change impact your focal species?" Students are asked to provide the following:

- a claim (the prediction) about whether the IPCC scenario information suggests that climate change will affect their chosen animal;

- reasoning that connects their prediction to the model-based evidence, such as noting that their species needs a particular prey to survive; and

- model-based evidence drawn from the maps of model-based climate projections, such as whether the distribution of conditions needed by the animal and its food source under the future scenario will differ significantly from the present distribution.

FIGURE 4-7 Three simplified Intergovernmental Panel on Climate Change (IPCC)-modeled future scenarios for the year 2100.

SOURCE: Adapted from Peters et al. (2012).

Table 4-3 shows sample student responses that illustrate both correct responses and common errors. Students 1, 3, and 4 made accurate predictions and supplied reasoning and evidence; students 2, 5, and 6 demonstrate common errors: insufficient evidence (student 2), inappropriate reasoning and evidence (student 5), and confusion between reasoning and evidence (student 6). Teachers can use this display to see the range of responses in the class at a glance and use that information to make decisions about future instruction.
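The class display described above amounts to grouping coded responses by category. As a minimal sketch, assuming each response has already been assigned one of the categories named in the text (the category labels and the `class_summary` function are illustrative; the report does not say how the software codes or displays responses), a teacher-facing summary could be produced as follows:

```python
# Categories for the six responses in Table 4-3, as described in the text.
CODES = {
    1: "accurate",
    2: "insufficient evidence",
    3: "accurate",
    4: "accurate",
    5: "inappropriate reasoning and evidence",
    6: "confusion between reasoning and evidence",
}


def class_summary(codes: dict[int, str]) -> dict[str, list[int]]:
    """Group student numbers by category so a teacher can scan the class quickly."""
    groups: dict[str, list[int]] = {}
    for student, category in sorted(codes.items()):
        groups.setdefault(category, []).append(student)
    return groups


for category, students in class_summary(CODES).items():
    print(f"{category}: {len(students)} ({', '.join(map(str, students))})")
```

Grouping by category rather than listing responses one by one makes it easy to see that half the class made accurate predictions and that each remaining error type appears once.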

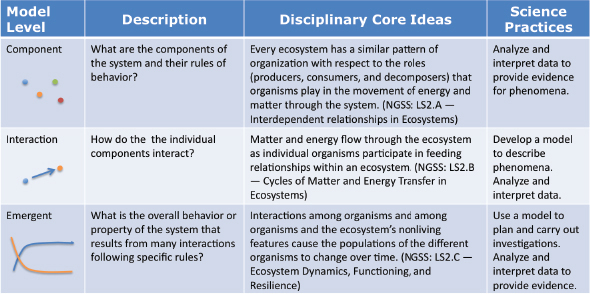

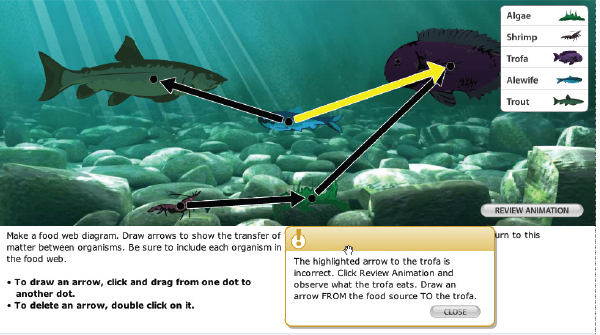

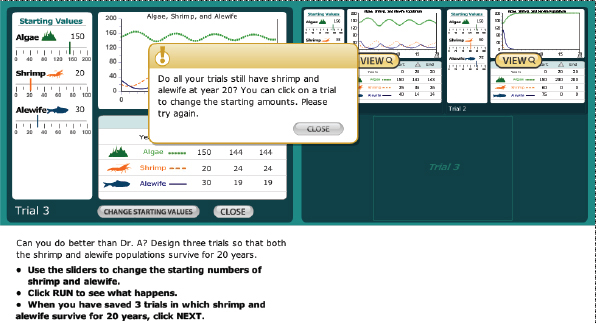

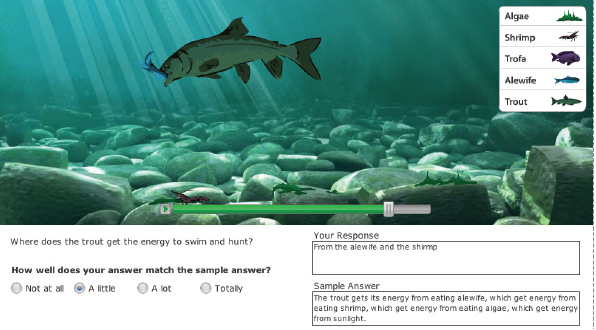

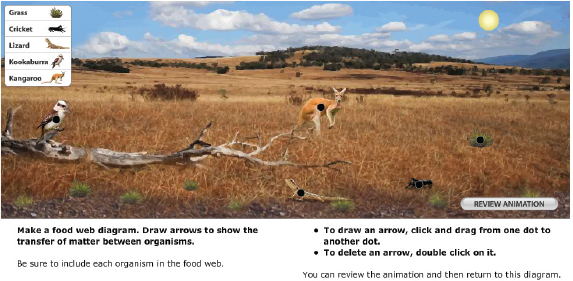

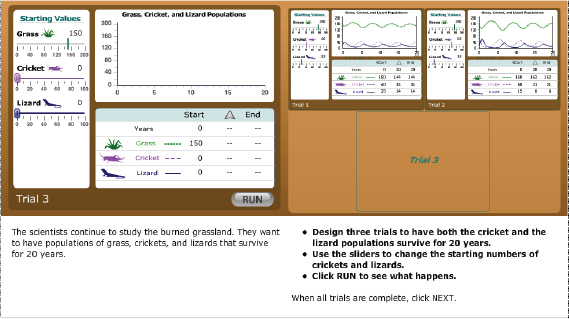

Example 8: Ecosystems

The committee chose this example, drawn from the SimScientists project, to demonstrate the use of simulation-based modules designed to be embedded in a curriculum unit to provide both formative and summative assessment information. Middle school students use computer simulations to demonstrate their understanding of core ideas about ecosystem dynamics and the progress of their thinking as they move from exploring ecosystem components, to the interactions among those components, to the way systems behave. Thus, the simulations also address the crosscutting concept of systems. The assessment components function close to classroom instruction.

FIGURE 4-8 Current and predicted Future 3 distribution for the red squirrel for Example 7, "Climate Change."

SOURCE: Songer et al. (2013). Copyright by the author; used with permission.

In this set of classroom modules, students use simulated, dynamic representations of particular ecosystems, such as a mountain lake or grasslands, to investigate features common to all ecosystems. The students investigate the roles of and relationships among species within habitats and the effects of these interactions on population levels (Quellmalz et al., 2009). Simulations of these environments can be used both to improve students' understanding of complex ecosystems and to


TABLE 4-3 Sample Student Responses in Example 7, "Climate Change"

Scientific Question: In Future 3, would climate change impact your focal species?

| Student | Part | Response |
|---|---|---|
| Student 1 | Claim | Climate change will effect my focal species. |
| | Reasoning | The abiotic conditions will change, and the temperature will change; therefore, the habitat of my species will change. |
| | Evidence | The map shows it will move into the Western part, therefore the climate changed. |
| Student 2 | Claim | Yes it will effect it, it will shorten the range. |
| | Reasoning | When the climate changes the focal species will have to move north because it won't be able to stand the warm weather. |
| | Evidence | The map. |
| Student 3 | Claim | Yes, climate change would effect the red-backed salamander. |
| | Reasoning | Abiotic and biotic factors can cause the red-backed salamander to relocate, such as temperature, precipitation, and invasive species. |
| | Evidence | If the temperatures increase, the red-backed salamander would have to live farther north where temperatures are suitable for its survival. |
| Student 4 | Claim | I think that climate change in Future 3 will not impact my focal species. |